Data sprawl, privacy breach risk and increased compliance regulations are top of mind for most companies right now. Keeping up with data privacy legislation and being prepared to comply must be a priority for security teams.

“The rate of change is overtaking the capacity to respond and maintain data privacy compliance,” according to Security Intelligence. Organizations that take a proactive approach to compliance “utilize automation tools to simplify and streamline data risk assessments, protection and breach response.” Using a scalable data privacy management tool can help you locate data and reduce your sensitive data footprint.

As your company collects increasing amounts of sensitive data, it is important to know where that data is stored. When sensitive data is not monitored, it can be mishandled, leaving your company vulnerable to a breach.

Committing to sensitive data footprint reduction allows your company to discover where its structured and unstructured data lives, so you can better organize and secure it. Here’s how to select a scalable sensitive data reduction solution that can grow with your business.

What to consider when choosing a scalable data privacy management solution

When your organization is choosing software to manage its data privacy, there are many factors to consider, including several that can affect scalability.

1. Don’t sacrifice accuracy for speed

Traditional data discovery scans can take a long time to yield accurate results. Under pressure to save time, some data and compliance professionals will trust a tool that moves faster but may sacrifice accuracy by omitting certain locations and missing context.

Design choices that value speed over accuracy don’t produce thorough data discovery. They may yield false positives or, even worse, false negatives, and they can leave gaps in location coverage. The results lack the depth of information required to understand the context of the data found, which raises concerns about accurate compliance with privacy regulations.

2. Choose a hybrid of agent-based and centralized scanning

One question information security professionals might wrestle with in selecting a tool for data privacy management is whether to choose an agent-based or agentless (centralized) solution.

Some sensitive data discovery tools offer agentless scanning, where all data is read by a single centralized deployment of cloud-based software. While this centralized approach can be simpler to deploy and maintain, it may have limitations and performance issues, including:

- Network contention and congestion on internal LANs, across firewalls, and over Internet connections while data is in transit.

- Consumption of excessive Internet bandwidth needed by other critical functions like off-site backups and other SaaS applications.

- Significant cloud repository egress fees when data is scanned in a cloud repository without an agent within or directly adjacent to that repository.

A hybrid of agent-based and centralized scanning often provides the optimal combination of flexibility and scalability for a data privacy management solution. A hybrid solution might deploy agents where necessary while still providing robust centralized, automated, and scalable agentless scanning options for consistently high performance and comprehensive data discovery.

3. Keep in mind bandwidth, capacity, and contention

Bandwidth and capacity are also a concern for managing data privacy, due to the growing volume of sensitive data that requires discovery. To compensate, keeping the scanning software or agent as close to the data as possible can reduce bandwidth requirements and avoid contention with other users.
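To make the bandwidth argument concrete, here is a rough back-of-the-envelope sketch in Python. It compares moving a whole dataset to a centralized scanner with returning only scan results from an agent that sits next to the data. Every number in it (dataset size, results size, link speed, per-GB egress price) is a hypothetical placeholder, and the `estimate_scan_transfer` helper is illustrative only; it is not part of any product API.

```python
from dataclasses import dataclass


@dataclass
class ScanEstimate:
    bytes_moved: float
    transfer_hours: float
    egress_cost: float


def estimate_scan_transfer(bytes_moved, link_mbps, egress_price_per_gb=0.0):
    """Estimate transfer time and egress cost for data that crosses the network.

    Assumes the link is fully available to the scan, which is optimistic:
    real links are shared with backups, SaaS traffic, and other users.
    """
    seconds = bytes_moved * 8 / (link_mbps * 1_000_000)
    gigabytes = bytes_moved / 1_000_000_000
    return ScanEstimate(bytes_moved, seconds / 3600, gigabytes * egress_price_per_gb)


# Hypothetical inputs, chosen only for illustration.
DATASET_BYTES = 10 * 10**12   # 10 TB of content to inspect
RESULTS_BYTES = 50 * 10**6    # 50 MB of scan results sent back by an agent
LINK_MBPS = 500               # usable link speed in megabits per second
EGRESS_PRICE_PER_GB = 0.05    # placeholder price, not a real provider rate

# Centralized scanning pulls the full dataset across the link.
centralized = estimate_scan_transfer(DATASET_BYTES, LINK_MBPS, EGRESS_PRICE_PER_GB)
# An agent next to the data reads locally and returns only its results.
local_agent = estimate_scan_transfer(RESULTS_BYTES, LINK_MBPS, EGRESS_PRICE_PER_GB)

for label, est in (("centralized", centralized), ("local agent", local_agent)):
    print(f"{label}: {est.transfer_hours:.4f} h in transit, {est.egress_cost:.4f} in egress fees")
```

The model ignores scan compute time and protocol overhead, so treat it as a way to compare orders of magnitude rather than as a sizing tool.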

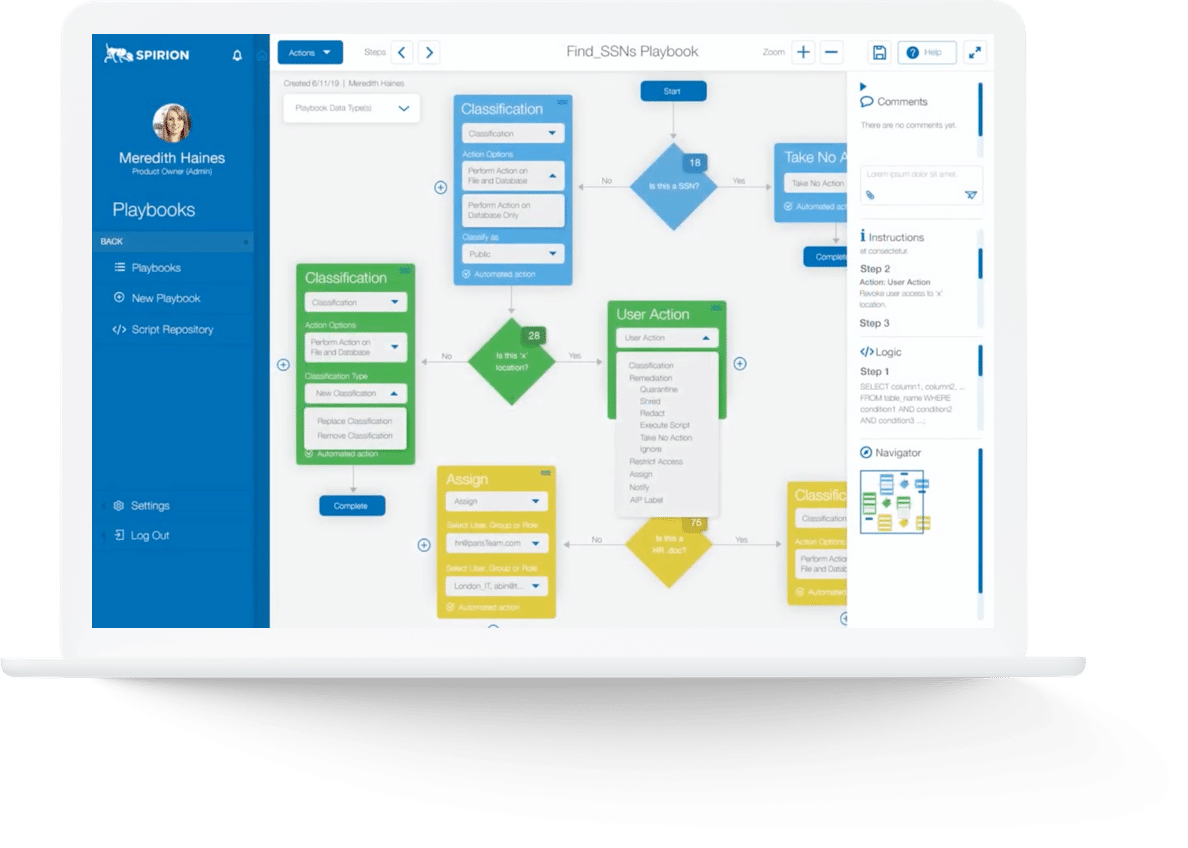

Scalability and performance with Spirion’s Sensitive Data Platform

Spirion’s Sensitive Data Platform (SDP) provides the most appropriate scanning method for the task at hand to avoid compromises and maximize the total ROI. Local agents can be deployed directly on servers and PCs where advantageous. Meanwhile, cloud agents can be used to scan cloud repositories or a combination of cloud and on-premises locations.

On-prem agents

On-prem agents are usually deployed to on-premises workstations, PCs, servers, or other local platforms. They use local compute resources and the high-bandwidth, low-contention storage buses connecting disk drives to the server or PC they are running on. Because only the scan results are returned to the Sensitive Data Platform Console, on-prem agents greatly reduce network bandwidth and contention issues.

Cloud agents

Cloud agents, running on the Azure platform, work from a shared global search history, so they are aware of what other agents have already scanned. This eliminates duplicate rescanning while ensuring a complete and thorough scan.

Cloud agents can be automatically added or destroyed, and groups of cloud agents can be launched as an agent team wherever needed. This offers time and cost efficiencies with no pre-configuration overhead or dedicated hardware requirements.

Cloud Watcher

Cloud Watcher is similar to a cloud agent, but rather than performing scans on demand or based on a preset schedule, Cloud Watcher starts a new scan by a cloud agent whenever a cloud repository API indicates the presence of new or changed data. Because scans run only when necessary, this reduces the performance and scheduling impact of scanning for new or changed data.
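The general idea of change-triggered scanning can be sketched in a few lines of Python. This is not how Cloud Watcher is implemented; it is a minimal illustration that assumes the repository can report a version marker (such as an ETag or modification stamp) for each item. The `ChangeWatcher` class, the `find_changed` helper, and the sample file names are all hypothetical.

```python
from typing import Callable, Dict, List


def find_changed(previous: Dict[str, str], current: Dict[str, str]) -> List[str]:
    """Return paths that are new or whose version marker differs from last time."""
    return sorted(path for path, version in current.items()
                  if previous.get(path) != version)


class ChangeWatcher:
    """Triggers a scan only for items that are new or changed since the last listing."""

    def __init__(self, scan: Callable[[str], None]) -> None:
        self._scan = scan
        self._seen: Dict[str, str] = {}

    def on_listing(self, listing: Dict[str, str]) -> List[str]:
        changed = find_changed(self._seen, listing)
        for path in changed:
            self._scan(path)          # hand the item to a scanning agent
        self._seen = dict(listing)    # remember the state we just processed
        return changed


# Usage with a stand-in scan function that just records what it was asked to scan.
scanned: List[str] = []
watcher = ChangeWatcher(scanned.append)

first = watcher.on_listing({"a.csv": "v1", "b.docx": "v1"})
second = watcher.on_listing({"a.csv": "v1", "b.docx": "v2", "c.pdf": "v1"})
print(first, second)
```

On the second listing only the changed document and the new file are scanned; the unchanged file is skipped, which is the saving the paragraph above describes.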

Agent teams

By combining sets of cloud agents or on-prem agents into an agent team, Spirion SDP breaks the work into small segments, allowing for distributed or parallelized scanning that reduces overall scan times. Agent teams are fully automated and highly scalable – you simply select a set of agents to use and SDP figures out how to divide and distribute the scan process across them, sharing a global scan history to ensure that no work is duplicated. As additional agents configured for the scan become available, they check a queue for the next available scan portion and begin scanning immediately.
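The queue-plus-shared-history pattern described here can be illustrated with a small Python sketch. It is a simplified stand-in, not SDP’s actual mechanism: threads play the role of agents, an in-memory set plays the role of the global scan history, and the `run_agent_team` function and segment names are hypothetical.

```python
import queue
import threading
from typing import Iterable, List, Set, Tuple


def run_agent_team(segments: Iterable[str], agent_count: int,
                   history: Set[str]) -> List[Tuple[str, str]]:
    """Distribute scan segments across agents, skipping anything already in history."""
    work: "queue.Queue[str]" = queue.Queue()
    for segment in segments:
        work.put(segment)

    lock = threading.Lock()
    completed: List[Tuple[str, str]] = []

    def agent(name: str) -> None:
        while True:
            try:
                segment = work.get_nowait()   # take the next available portion
            except queue.Empty:
                return                        # nothing left to do
            with lock:
                if segment in history:        # already handled by some agent
                    continue
                history.add(segment)          # claim it before scanning
            # A real agent would scan the segment here; this sketch only records it.
            with lock:
                completed.append((name, segment))

    threads = [threading.Thread(target=agent, args=(f"agent-{i}",))
               for i in range(agent_count)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return completed


# "share/hr" was scanned earlier, and "share/finance" is queued twice by mistake.
history = {"share/hr"}
completed = run_agent_team(
    ["share/hr", "share/finance", "share/legal", "share/finance"],
    agent_count=3,
    history=history,
)
print(sorted(segment for _, segment in completed))
```

Each segment is scanned at most once regardless of how many agents are running or how often it appears in the queue. Note that this sketch marks a segment as done when it is claimed; a production design would also need to handle an agent failing mid-scan.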

Spirion SDP offers the choice to deploy agents where necessary, while still providing robust centralized, automated, and highly scalable agentless scanning options for consistently high performance and comprehensive discovery.